What are Services?

Services are a fundamental part of the Kubernetes networking model. They expose Pods and Deployments to the network so that workloads can communicate with each other and with external clients. A Service does this by defining a logical set of Pods and a policy for accessing them, such as the IP address, ports, and protocol.

In Kubernetes, a Service is an abstraction that defines a logical set of Pods and a policy by which to access them. It provides a stable IP address and DNS name, and it can load balance traffic across the Pods behind it.

It is important to know that the lifecycles of a Pod and a Service are not connected: even if a Pod dies, the Service and its IP address remain available.

Basic Configuration of a Kubernetes Service

Services can be configured in two ways: with a selector or without a selector.

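Service without selector:

    apiVersion: v1
    kind: Service
    metadata:
      # Service names must be valid DNS labels: lowercase letters, digits, and hyphens
      name: my-service-without-selector
    spec:
      ports:
        - port: 8080         # port the Service listens on
          targetPort: 31060  # port on the backend that receives the traffic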
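Because a Service without a selector does not pick Pods on its own, Kubernetes does not create endpoints for it automatically, so the backends have to be supplied by hand. One way to do this, shown here as a minimal sketch, is an EndpointSlice object that is linked to the Service through a label. The Service name `my-service-without-selector`, the slice name, and the backend address `10.4.5.6` are illustrative assumptions, not values from a real cluster.

```yaml
apiVersion: discovery.k8s.io/v1
kind: EndpointSlice
metadata:
  # Hypothetical name; by convention it is prefixed with the Service name
  name: my-service-without-selector-1
  labels:
    # Links this slice to the Service (assumes the Service has this name)
    kubernetes.io/service-name: my-service-without-selector
addressType: IPv4
ports:
  # Port on the backend that receives the traffic.
  # The port name is omitted because the Service port is unnamed.
  - port: 31060
    protocol: TCP
endpoints:
  - addresses:
      - "10.4.5.6"   # assumed backend IP address
```

With both objects applied, traffic sent to the Service on port 8080 would be forwarded to the listed address on port 31060.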

Service With Selector:

A Service in Kubernetes can be configured with a selector to specify which Pods it should expose to the network. A selector is a set of one or more key-value pairs that is matched against Pod labels. It is defined in the Service's YAML file, and the Service uses it to find the Pods that it should expose.

Here is an example of how to define a Service with a selector:

    apiVersion: v1
    kind: Service
    metadata:
      # Service names must be valid DNS labels: lowercase letters, digits, and hyphens
      name: my-service-with-selector
    spec:
      selector:
        app: my-app
      ports:
        - name: http
          port: 80
          targetPort: 8080
      type: ClusterIP

In this example, the Service has a selector with the key "app" and the value "my-app". The Service will expose all pods that have a label with the key "app" and the value "my-app". The Service is also configured to listen on port 80 and forward traffic to port 8080 on the target pods. The Service type is set to ClusterIP, meaning that the Service will be accessible only within the cluster.

Difference between Kubernetes Service and Deployment

A Deployment is responsible for keeping a set of Pods running, while a Service is responsible for enabling network access to a set of Pods. We could use a Deployment without a Service to keep a set of identical Pods running in the Kubernetes cluster; the Deployment could be scaled up and down and the Pods replicated. Each Pod could then be reached individually through direct network requests rather than through a Service, but this is hard to manage for many Pods because Pod IP addresses change whenever Pods are recreated.

You could also use a Service without a Deployment, but you would have to create each Pod individually (instead of "all at once" as with a Deployment), which is tedious, especially for a large application. The Service could then forward network requests to these Pods by selecting them based on their labels.
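To make the relationship concrete, here is a minimal sketch of a Deployment whose Pods would be picked up by a Service that selects on the label `app: my-app` and forwards to port 8080. The Deployment name, replica count, and image are hypothetical placeholders chosen for illustration.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app                  # hypothetical name
spec:
  replicas: 3                   # the Deployment keeps three identical Pods running
  selector:
    matchLabels:
      app: my-app               # must match the Pod template labels below
  template:
    metadata:
      labels:
        app: my-app             # the label a Service selector would match
    spec:
      containers:
        - name: my-app
          image: registry.example.com/my-app:1.0   # placeholder image
          ports:
            - containerPort: 8080                  # the port a Service would use as targetPort
```

The Deployment and the Service never reference each other directly; the shared label is the only link between them.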

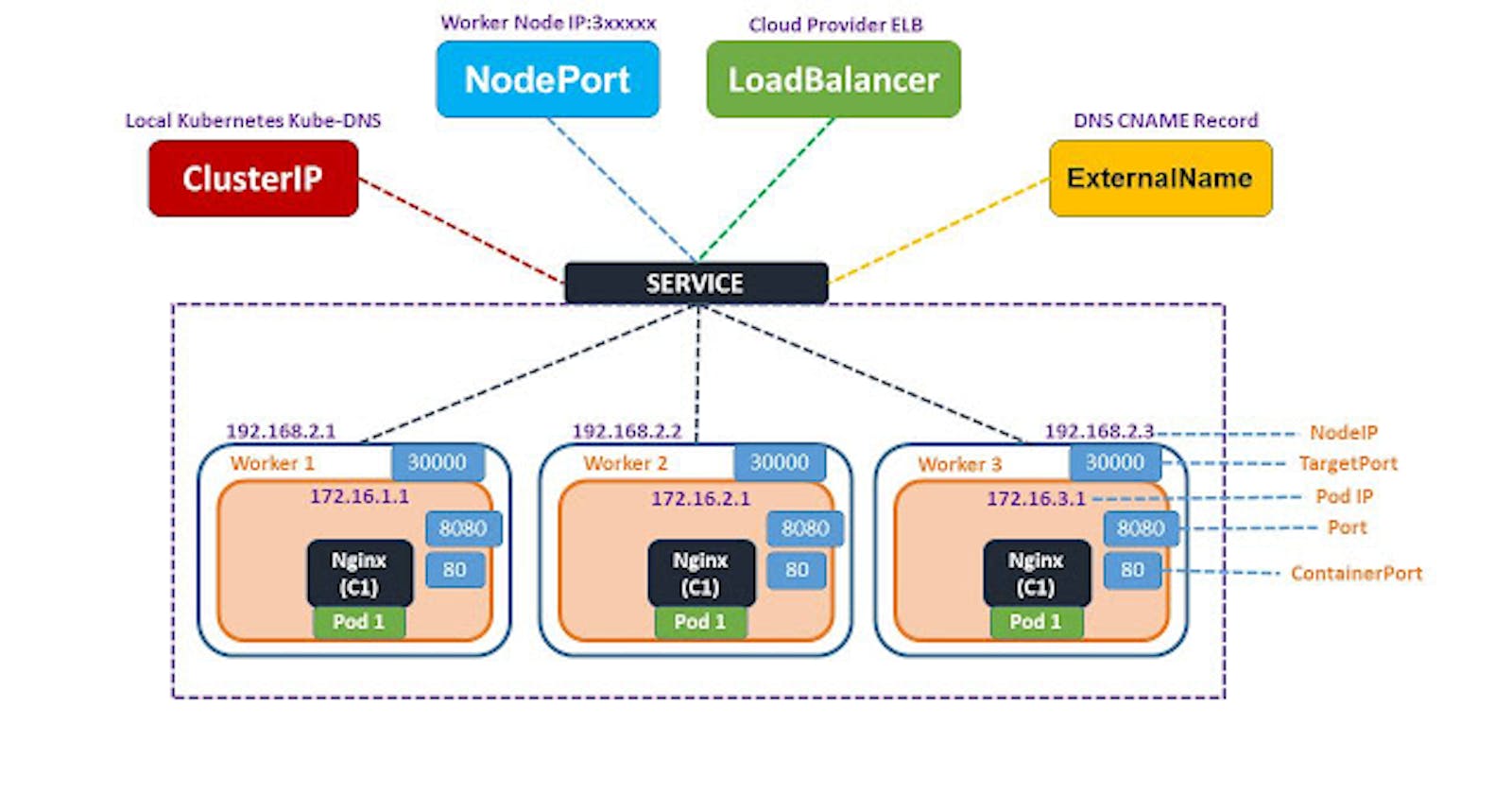

Types of Services

There are different ways to route traffic to the Services in your Kubernetes cluster.

ClusterIP

A ClusterIP is a virtual IP address: it is not bound to any physical network interface, it is only reachable from within the cluster, and it is not exposed to the internet. A Service provides a single entry address for a group of Pods that serve the same function and distributes requests across those backend Pods through the ClusterIP.

The ClusterIP is an internal IP address that Kubernetes allocates to the Service, which acts as the entry point to the Pods behind it. ClusterIP is the default Service type and is commonly used for communication between Services or Pods within a cluster, for example to allow one microservice to call another. It also provides a load-balanced IP address.

Basic YAML Configuration of a ClusterIP

    apiVersion: v1
    kind: Service
    metadata:
      labels:
        k8s-app: first-dashboard
      name: first-dashboard
      namespace: first-dashboard
    spec:
      ports:
        - port: 443
          targetPort: 8443
      selector:
        k8s-app: first-dashboard

targetPort: the port that the containers are listening on.

namespace: isolates a group of resources within a single cluster.

To inspect the Service and the endpoints it routes to, run:

    kubectl describe service <YourserviceName>

The ClusterIP method is not suitable for exposing production services to external clients.
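Inside the cluster, a ClusterIP Service is normally reached through its DNS name, which has the form `<service>.<namespace>.svc.cluster.local`. As a rough sketch, a one-off client Pod could call the dashboard Service like this. The Pod name and the `curlimages/curl` image are assumptions made for illustration, and the DNS name assumes the default cluster domain `cluster.local`.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: dashboard-client        # hypothetical name
  namespace: default
spec:
  restartPolicy: Never          # run the request once and stop
  containers:
    - name: curl
      image: curlimages/curl    # assumed to be pullable from the cluster
      command:
        - curl
        - "-sk"                 # silent; skip TLS verification for this sketch
        # Service "first-dashboard" in namespace "first-dashboard", Service port 443
        - "https://first-dashboard.first-dashboard.svc.cluster.local:443"
```

The same request sent from a machine outside the cluster would not resolve or connect, which is what "only reachable from within the cluster" means in practice.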

NodePort

NodePort is the most primitive way of exposing a Service to the internet. It opens a specific port on every node in the Kubernetes cluster. That port can be reached from outside the cluster, and traffic arriving on it is forwarded to one of the Pods backing the Service.

NodePort is the simplest way to get external traffic to a Service, but it is less flexible than a LoadBalancer. It is usually used for development and testing purposes, or for simple use cases where external traffic only needs to reach a single service.

Basic YAML Configuration of a NodePort

    apiVersion: v1
    kind: Service
    metadata:
      name: service-hostname
      namespace: default
    spec:
      selector:
        app: echo-hostname
      type: NodePort # Type is specified as NodePort
      ports:
        - name: http
          port: 80
          targetPort: 80
          nodePort: 31060 # nodePort is specified (the field name is case-sensitive)
          protocol: TCP

spec: describes the desired state of the Service.

selector: selects Pods with the label "app" set to "echo-hostname".

type: NodePort: exposes the Service on a static port on each node, making it available to network requests from clients outside the cluster.

name: http: a name for this port entry; names are required when a Service defines more than one port.

port: the port the Service listens on inside the cluster.

targetPort: the port that the containers are listening on.

nodePort: the port opened on every node, reachable from outside the cluster.

Note: If you do not specify a nodePort, Kubernetes assigns one automatically from the NodePort range.
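For comparison, the following sketch shows the same kind of Service with the node port left out, so that Kubernetes chooses it. The Service name is a hypothetical one used only for this illustration.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: service-hostname-auto   # hypothetical name
  namespace: default
spec:
  type: NodePort
  selector:
    app: echo-hostname
  ports:
    - name: http
      port: 80
      targetPort: 80
      protocol: TCP
      # nodePort is omitted: Kubernetes picks a free port from the NodePort range
```

After the Service is created, the assigned value appears in the `nodePort` field of the port entry when you read the Service back from the cluster.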

Downsides of a NodePort:

You can expose only one Service per port

You can only use ports in the range 30000-32767 (the default range)

NodePort is not recommended for exposing services in production

LoadBalancer

Load balancing is the method of distributing network traffic or client requests across multiple servers in order, as the name suggests, to balance the load. It is a critical strategy and must be set up correctly; otherwise, clients may be unable to reach the servers even when all of them are working fine.

A load balancer is the standard way of exposing services to the internet. It sits between the user, or ‘client’, and the server cluster and distributes all requests from users (such as video, text, application data, or images) across the servers.

In simple words, here’s what happens:

Traffic comes to your application or site

A load balancer distributes the traffic across appropriate server nodes

The node receives the request and delivers a response to the user.

Basic YAML Configuration of a Load Balancer

    apiVersion: v1
    kind: Service
    metadata:
      name: api
    spec:
      type: LoadBalancer # Type is declared as a load balancer
      ports:
        - protocol: TCP
          port: 80
          targetPort: 9376
      selector:
        app: api

type: LoadBalancer: tells the cloud provider to provision an external load balancer for the Service.

targetPort: the port that the containers are listening on.

protocol: TCP: the transport protocol used on this port (TCP is the default).

When you declare the Service type as LoadBalancer in your YAML file and apply it to a cluster running on a cloud provider, the cloud controller manager creates a load balancer for your application.
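Once the load balancer has been provisioned, its external address is recorded in the Service's status. The fragment below is a sketch of what the relevant part of the Service might look like when read back from the cluster. The IP address is an illustrative value from a documentation range, and some providers report a hostname instead of an IP.

```yaml
# Fragment of a Service of type LoadBalancer after provisioning (illustrative)
spec:
  type: LoadBalancer
status:
  loadBalancer:
    ingress:
      - ip: 192.0.2.127   # assumed external address; clients connect here on port 80
```

Until provisioning finishes, the `ingress` list is empty and the Service is reachable only through its cluster-internal address.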

Use a load balancer when you want:

to expose a service directly

to send any type of traffic, such as HTTP, TCP, UDP, or WebSockets

What are the downsides of a load balancer?

- It can be very expensive, because you need a separate Service of type LoadBalancer, and therefore a separate cloud load balancer, for each service you want to expose.